v-magine installation - network issues [closed]

Hi,

We've installed v-magine on one of our Hyper-V nodes running Windows Server 2012 R2 Standard. So far the installation has worked; however, when we try to deploy VMs, networking does not work properly.

The VMs cannot reach the outside (e.g. the public network), but they can see and ping each other.

The Hyper-V node has 2 NICs:

- 1x OOB interface with a public IP (no tagged VLAN);

- 1x external public network interface (no tagged VLAN).

A /27 has been assigned to our public network, so we should be able to use addresses from that public subnet on any VM running on that node.
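To make the size of that allocation concrete, here is a minimal sketch using Python's standard `ipaddress` module. The real prefix is masked in this post, so `203.0.113.32/27` (a documentation-only range from RFC 5737) is used purely as a hypothetical stand-in; only the /27 arithmetic is meant to carry over.

```python
import ipaddress

# Hypothetical stand-in for the masked public block (xxx.xxx.xxx.32/27);
# 203.0.113.0/24 is reserved for documentation (RFC 5737).
public_block = ipaddress.ip_network("203.0.113.32/27")

total = public_block.num_addresses   # 2**(32 - 27) = 32 addresses
usable = list(public_block.hosts())  # excludes the network and broadcast addresses

print(f"network:   {public_block.network_address}")
print(f"broadcast: {public_block.broadcast_address}")
print(f"total:     {total}")
print(f"usable:    {len(usable)} ({usable[0]} - {usable[-1]})")
```

With a /27 aligned on .32, that leaves 30 usable addresses (.33 to .62), one of which is normally taken by the upstream gateway.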

The Hyper-V node therefore has 4 vSwitches:

- LOCAL (internal), previously created;

- VRACK (external; the one OpenStack should be using), previously created;

- v-magine-internal (internal), created by the v-magine installer;

- v-magine-data (private), created by the v-magine installer.

Note that the VMs have the expected vSwitch assigned:

`PS C:\> Get-VM -Name instance-00000006,instance-00000007 | select -ExpandProperty NetworkAdapters | select VMName, SwitchName`

| VMName | SwitchName |
|---|---|
| instance-00000006 | v-magine-data |
| instance-00000007 | v-magine-data |

Both VMs use the network and subnet created by the v-magine installer.

The current network has the following values:

`(neutron) net-show 612ebe38-47bd-454b-84d4-61de3db8638d`

| Field | Value |
|---|---|
| admin_state_up | True |
| availability_zone_hints | |
| availability_zones | nova |
| created_at | 2016-12-09T17:35:15 |
| description | |
| id | 612ebe38-47bd-454b-84d4-61de3db8638d |
| ipv4_address_scope | |
| ipv6_address_scope | |
| is_default | False |
| mtu | 1500 |
| name | public |
| provider:network_type | vlan |
| provider:physical_network | physnet1 |
| provider:segmentation_id | 535 |
| router:external | True |
| shared | False |
| status | ACTIVE |
| subnets | 7043c4ae-a7d7-40e1-8672-b0594e1f5e21 |
| tags | |
| tenant_id | 3b512d5359764ea1b018e413b580f728 |
| updated_at | 2016-12-09T17:35:15 |

And here are the current subnet values (I have masked sensitive data):

`(neutron) subnet-show 7043c4ae-a7d7-40e1-8672-b0594e1f5e21`

| Field | Value |
|---|---|
| allocation_pools | {"start": "xxx.xxx.xxx.36", "end": "xxx.xxx.xxx.45"} |
| cidr | xxx.xxx.xxx.32/27 |
| created_at | 2016-12-09T17:35:19 |
| description | |
| dns_nameservers | |
| enable_dhcp | True |
| gateway_ip | xxx.xxx.xxx.62 |
| host_routes | |
| id | 7043c4ae-a7d7-40e1-8672-b0594e1f5e21 |
| ip_version | 4 |
| ipv6_address_mode | |
| ipv6_ra_mode | |
| name | public_subnet |
| network_id | 612ebe38-47bd-454b-84d4-61de3db8638d |
| subnetpool_id | |
| tenant_id | 3b512d5359764ea1b018e413b580f728 |
| updated_at | 2016-12-09T18:04:57 |
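The addressing in the subnet above can be sanity-checked offline. This is a minimal sketch using only Python's standard `ipaddress` module: it confirms that the gateway and the allocation pool sit inside the CIDR and that the gateway is not inside the range DHCP hands out. The `203.0.113.x` prefix is a hypothetical stand-in for the masked octets (only the last octets .32/27, .36, .45 and .62 come from the post), and `check_subnet` is an illustrative helper, not part of Neutron.

```python
import ipaddress

# Hypothetical stand-ins for the masked values; only the last octets are from the post.
cidr = ipaddress.ip_network("203.0.113.32/27")
gateway = ipaddress.ip_address("203.0.113.62")
pool_start = ipaddress.ip_address("203.0.113.36")
pool_end = ipaddress.ip_address("203.0.113.45")


def check_subnet(cidr, gateway, pool_start, pool_end):
    """Return a list of problems; an empty list means the addressing is self-consistent."""
    problems = []
    if gateway not in cidr:
        problems.append(f"gateway {gateway} is outside {cidr}")
    if pool_start not in cidr or pool_end not in cidr:
        problems.append("allocation pool is not fully inside the CIDR")
    if pool_start > pool_end:
        problems.append("allocation pool start is after its end")
    if pool_start <= gateway <= pool_end:
        problems.append(f"gateway {gateway} falls inside the allocation pool")
    return problems


# Number of addresses DHCP can hand out (.36 through .45 inclusive).
pool_size = int(pool_end) - int(pool_start) + 1
print("pool size:", pool_size)
print("problems:", check_subnet(cidr, gateway, pool_start, pool_end) or "none")
```

This only validates the addressing itself; it says nothing about whether traffic actually leaves the host.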

I am not sure what I am missing; could you please help me out? We are quite new to OpenStack and would definitely appreciate some guidance.

Thanks!

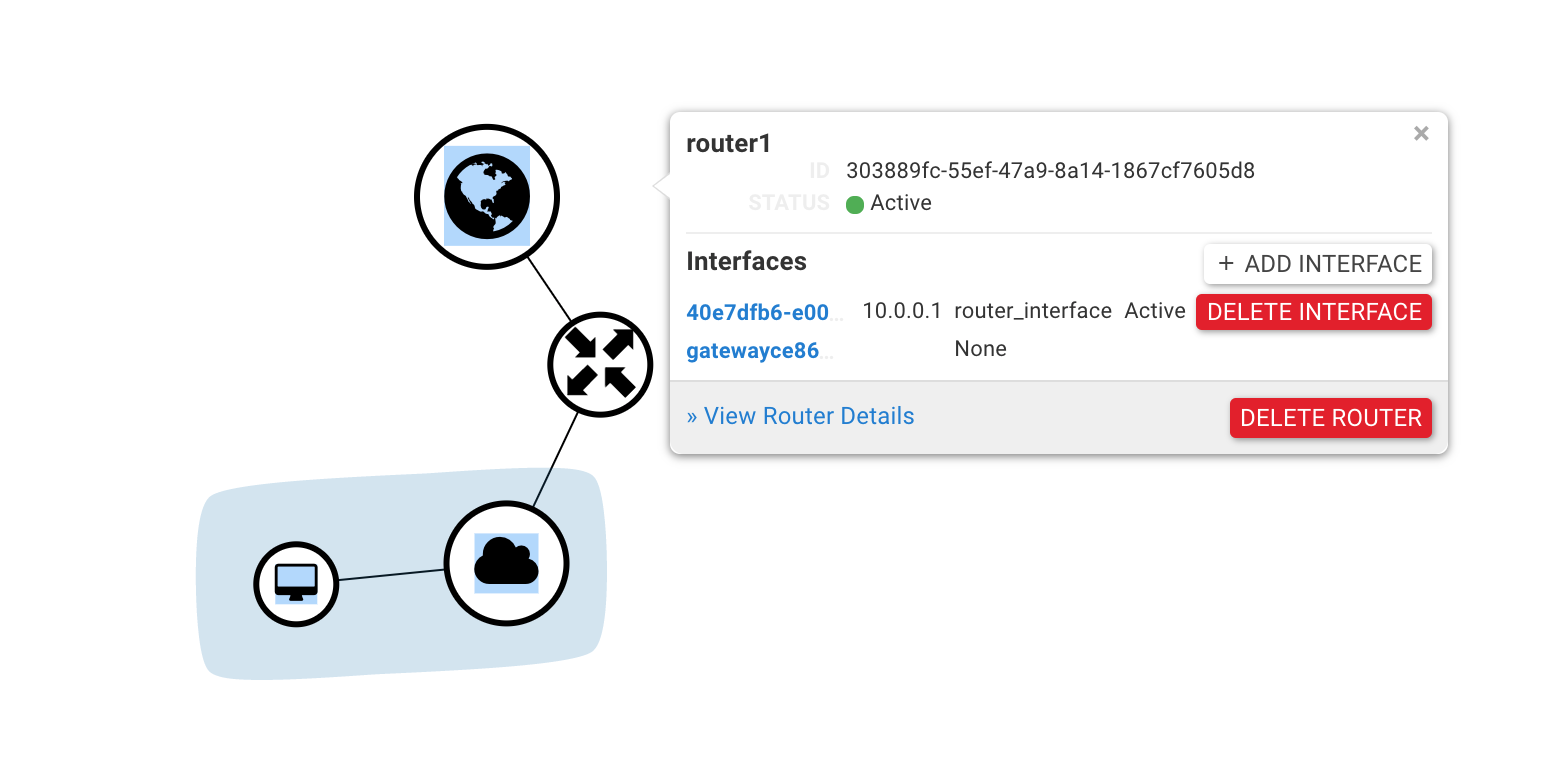

To begin with, let's ensure that the networking works as expected between VMs and Neutron: are the VMs getting an IP via DHCP? Can you ping the internal router IP from the VMs?

Hi Alex, the VMs are getting the correct IPs from the DHCP service. However, I can't seem to ping the internal gateway (network:router_gateway). VM-to-VM pings work fine, though. Please let me know if you need any further information or configuration snippets. Thanks!

From inside the VMs, you should be able to ping the gateway (10.0.0.1) and the DHCP server (usually 10.0.0.2). Can you please confirm whether this is the case? Thanks

Actually, those VMs are being assigned floating (routable) IPs, not addresses from the private 10.x.x.x subnet. I'm still able to ping the DHCP service at x.x.x.38 (a routable IP). From Neutron, inside the router namespace, I'm able to ping the router gateway:

`ip netns exec qrouter-5e279681-fd39-4f0b-9ecc-36a66abd246c ping x.x.x.34`

FYI: I'm deploying those VMs in the "admin" project for testing purposes. I'd like them to use external IPs to reach the outside.